By Bob Johnston, CEO

Every IT leader has a scar from a failed implementation. The common culprit? Treating quality like a checklist instead of a mindset. Too often, organizations still treat software quality as someone else’s job — an optional add-on rather than a foundation. This mindset leads to costly failures, missed opportunities, and unpredictable outcomes. In other words, it’s risky.

Poorly specified software configurations and implementations are especially prone to failure. When business requirements are vague, incomplete, or misunderstood, the resulting systems often fail to deliver the intended value. These failures are not just technical; they’re strategic. They erode trust, inflate budgets, and delay critical initiatives.

But this risk is avoidable.

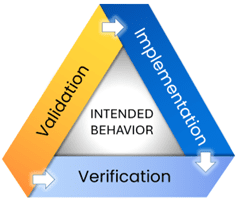

Validation: Modeling Intended Behavior

The journey to high-quality software begins with validation, ensuring that the business requirements truly reflect the intended behavior of the system being deployed.

The smartest teams don’t start configuring or customizing — they start modeling.

This means engaging stakeholders and vendors early and using modeling techniques such as:

- Process modeling

- Use case diagrams

- Business rule modeling

- Decision tables, data flow diagrams, and more

These tools help clarify expectations, uncover hidden assumptions, and align teams around a shared vision. Validation is not just documentation; it’s a collaborative process that ensures the “right” problem is being solved.
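To make one of these techniques concrete, here is a minimal sketch of a decision table written as plain data, with a check for combinations the business has not yet specified. The rule itself (membership and order size driving a shipping and discount outcome) and every name in it are hypothetical, chosen only for illustration; a real table would come out of the stakeholder sessions described above.

```python
from itertools import product

# Hypothetical business rule, for illustration only.
# Conditions, in order: (is_member, order_over_100)
DECISION_TABLE = {
    (True, True): "discount_and_free_shipping",
    (True, False): "discount",
    (False, True): "free_shipping",
    # (False, False) is deliberately left out to show gap detection.
}


def missing_rules(table, n_conditions):
    """Return every condition combination the table does not cover."""
    all_combos = product([True, False], repeat=n_conditions)
    return [combo for combo in all_combos if combo not in table]


def decide(table, *conditions):
    """Look up the outcome for one combination; fail loudly on a gap."""
    if conditions not in table:
        raise KeyError(f"No rule specified for conditions {conditions}")
    return table[conditions]


gaps = missing_rules(DECISION_TABLE, 2)
print("Unspecified combinations:", gaps)
```

Run against this table, the check reports that the non-member, small-order case has no defined outcome. That is exactly the kind of hidden assumption a validation session is meant to surface before anyone starts configuring.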

Pro Tip: A two-hour validation session today can save a two-month rework cycle tomorrow.

Verification: Testing Delivered Behavior

Once implementation begins, the focus shifts to verification — confirming that the delivered software and interfaces behave as intended.

Modern testing methods and technologies make this process faster, smarter, and more reliable:

- Requirements Traceability Matrix

- Automated test generation

- AI-assisted testing

- Model-based testing

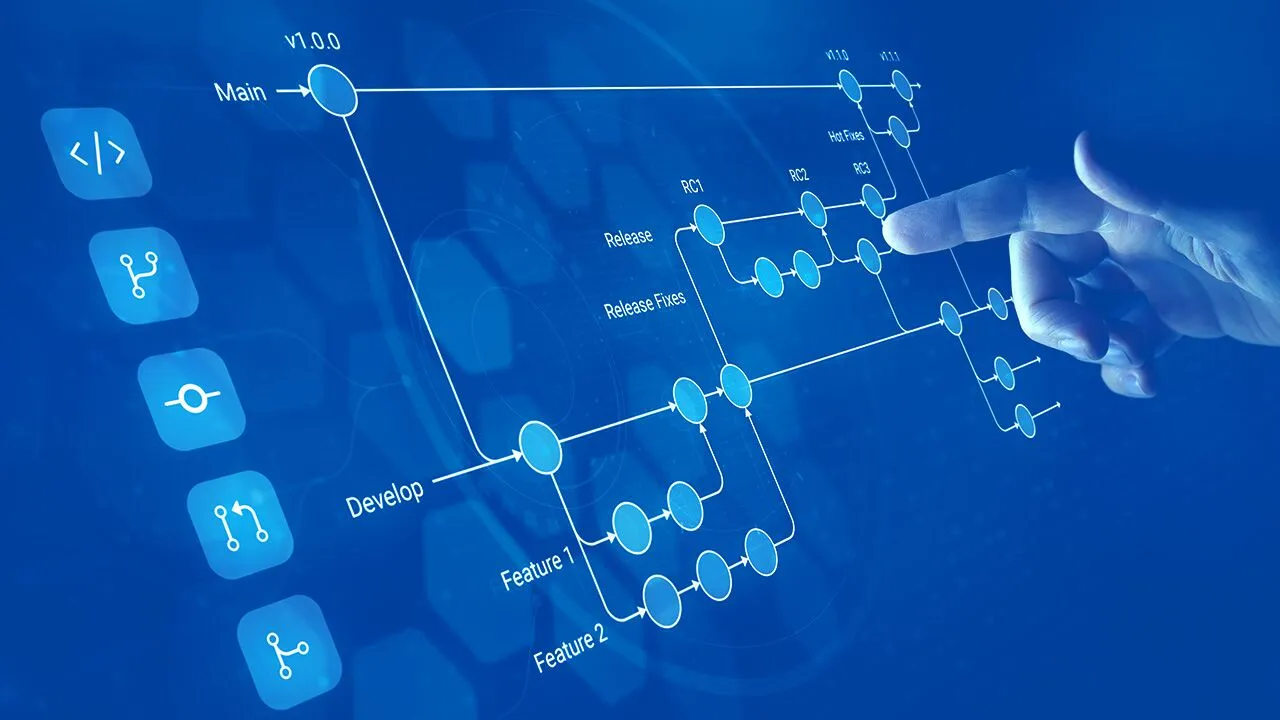

- Continuous integration and testing pipelines

These practices ensure that defects are caught early, regression risks are minimized, and quality is built into the software — not bolted on afterward.
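As a rough illustration of the first item in that list, a requirements traceability matrix can be as simple as a mapping from each requirement to the tests that exercise it. The sketch below assumes hypothetical requirement IDs and test names; it is not tied to any particular tool, and a production matrix would typically live in a test management system rather than a script.

```python
# Hypothetical requirements and tests, for illustration only.
REQUIREMENTS = {
    "REQ-1": "User can log in with valid credentials",
    "REQ-2": "Account locks after repeated failed logins",
    "REQ-3": "User can reset a forgotten password",
}

# Each test declares which requirements it verifies.
TESTS = {
    "test_login_ok": ["REQ-1"],
    "test_login_lockout": ["REQ-1", "REQ-2"],
}


def build_matrix(requirements, tests):
    """Map every requirement ID to the list of tests that cover it."""
    matrix = {req_id: [] for req_id in requirements}
    for test_name, covered in tests.items():
        for req_id in covered:
            if req_id not in matrix:
                # A test pointing at an unknown requirement is itself a defect.
                raise ValueError(f"{test_name} references unknown {req_id}")
            matrix[req_id].append(test_name)
    return matrix


def untested(matrix):
    """Return requirement IDs that no test covers, in sorted order."""
    return sorted(req_id for req_id, names in matrix.items() if not names)


matrix = build_matrix(REQUIREMENTS, TESTS)
print("Requirements without tests:", untested(matrix))
```

Here the password reset requirement has no test behind it. Surfacing that gap early, ideally as a failing check in the integration pipeline, is one way to catch a missing piece of verification before release.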

Quality isn’t a phase. It’s a decision.

IQM: A Smarter Approach

Together, validation and verification form the foundation of Intelligent Quality Management (IQM), a disciplined approach to software quality that integrates business intent with technical execution.

At Critical Logic, we champion IQM because it embeds quality thinking into every step — from requirements to release.

IQM treats quality as a strategic enabler, not a cost center. It reduces the risk of failure, enhances business outcomes, and delivers value at a predictable cost.

Conclusion: Quality Is a Business Imperative

Software quality is not optional. It’s the difference between success and failure, between innovation and stagnation.

In an era where digital transformation is non-negotiable, poor quality costs more than ever: in trust, time, and talent.

By embracing IQM and investing in smart validation and verification practices, organizations can build systems that work — systems that deliver on their promise and drive real business success.

Quality isn’t optional — it’s your strategy. Measure twice. Cut once. Build right.